How to Identify Bottlenecks in Scaling Servers

8 min read - September 22, 2025

Learn how to identify and fix performance bottlenecks in server scaling to enhance user experience and optimize resource usage.

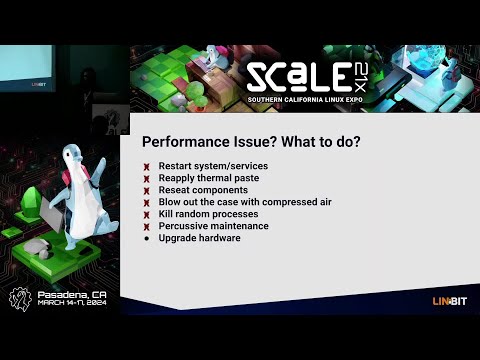

Scaling servers isn’t just about adding resources - it’s about finding and fixing bottlenecks that limit performance. These bottlenecks can cause delays, crashes, and poor user experiences, even with upgraded hardware. To address this, focus on:

- Baseline Metrics: Measure CPU usage, memory, disk I/O, network throughput, and response times under normal conditions.

- Monitoring Tools: Use platforms like New Relic, Grafana, and JMeter to track performance and simulate traffic.

- Testing: Perform load and stress tests to identify breaking points.

- Analysis: Examine logs, resource usage, and database performance to pinpoint inefficiencies.

- Fixes: Optimize code, upgrade hardware (e.g., SSDs), and implement horizontal scaling where necessary.

Setting Up Performance Baselines

Having baseline data is crucial for identifying whether changes in server performance are routine fluctuations or actual bottlenecks. Baselines provide a reference point, making it easier to spot deviations from typical server behavior.

To create accurate baselines, gather performance data that reflects normal daily and weekly traffic patterns.

Key Metrics to Track

Tracking the right metrics is essential for identifying performance issues early.

- CPU utilization: This shows how much processing power your server is using at any moment. While acceptable ranges depend on your specific setup, monitoring CPU usage can reveal when your system is either overburdened or underutilized.

- Memory utilization: This tracks the amount of RAM your applications are consuming. Prolonged high memory usage can force the system to rely on slower disk-based swap space, significantly slowing performance.

- Disk I/O metrics: These measure how quickly your storage reads and writes data. Key metrics include IOPS (Input/Output Operations Per Second) and disk latency. For example, traditional hard drives typically achieve 100–200 IOPS with 10–15 milliseconds of latency, while NVMe SSDs can deliver hundreds of thousands of IOPS with sub-millisecond latency.

- Network throughput: This measures data transfer rates in Mbps or Gbps. Monitoring both incoming and outgoing bandwidth, along with packet loss rates, is vital. Packet loss exceeding 0.1% often indicates network congestion or hardware issues.

- Response times: Response times reflect how quickly your applications handle requests. For web applications, response times within a few hundred milliseconds are ideal. Research from Google found that 53% of mobile site visits are abandoned when a page takes longer than three seconds to load.

- Application-specific metrics: These vary depending on your software stack but may include database query times, cache hit rates, or active connection counts. Slow queries or a falling cache hit rate can degrade overall performance even when CPU and memory look healthy, so track them alongside the system-level metrics.

Monitoring these metrics regularly helps you catch performance issues early, before they force an unplanned scaling decision.

Benchmarking and Recording Data

To establish reliable baselines, run your servers under normal production loads for at least two weeks. Record data at regular intervals - every 5–10 minutes is a good balance between detail and storage efficiency.

Peak load benchmarking is also important. Measure how your system performs during its busiest traffic periods to anticipate future scaling needs.

When documenting baseline data, include timestamps, metric values, and relevant context. This detailed record will help you compare performance before and after scaling efforts.
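As a rough illustration of recording baseline data with timestamps, values, and context, the Python sketch below samples a few host-level numbers and appends each reading as one JSON line. It assumes a Unix-like host (`os.getloadavg()` is not available on Windows), and the file name, the `context` labels, and the chosen metrics are hypothetical; a real deployment would usually rely on a monitoring agent and a time-series database instead.

```python
import json
import os
import shutil
import time
from datetime import datetime, timezone


def take_sample(context: str, path: str = "/") -> dict:
    """Collect one baseline reading with a UTC timestamp and a free-form context label."""
    load1, load5, load15 = os.getloadavg()  # Unix-only: 1/5/15-minute load averages
    disk = shutil.disk_usage(path)          # total/used/free bytes for the filesystem at `path`
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "context": context,                 # hypothetical label, e.g. "weekday-normal"
        "load_1m": load1,
        "load_5m": load5,
        "load_15m": load15,
        "cpu_count": os.cpu_count(),        # lets you read load relative to core count
        "disk_used_pct": round(100 * disk.used / disk.total, 2),
    }


def record_baseline(out_file: str, context: str, interval_s: float = 300, samples: int = 1) -> None:
    """Append `samples` readings to a JSON Lines file, waiting `interval_s` seconds between them."""
    with open(out_file, "a", encoding="utf-8") as fh:
        for i in range(samples):
            fh.write(json.dumps(take_sample(context)) + "\n")
            fh.flush()  # keep the file current if the process is interrupted
            if i < samples - 1:
                time.sleep(interval_s)
```

For example, `record_baseline("baseline.jsonl", "weekday-normal", interval_s=300, samples=288)` would capture one day at 5-minute resolution (288 × 5 minutes = 24 hours).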

Uptime measurements are another critical component. For example:

- 99% uptime equals roughly 7 hours of downtime per month.

- 99.9% uptime reduces downtime to about 43 minutes per month.

- The gold standard, 99.999% uptime (Five Nines), allows for only about 26 seconds of downtime per month.
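These downtime figures come from a one-line formula: allowed downtime = (1 - availability) × length of the period. A minimal Python version, assuming a 30-day month (43,200 minutes), looks like this:

```python
def downtime_budget_minutes(availability_pct: float, period_days: float = 30.0) -> float:
    """Maximum downtime, in minutes, allowed by an availability target over a period."""
    period_minutes = period_days * 24 * 60
    return (1 - availability_pct / 100) * period_minutes


for target in (99.0, 99.9, 99.99, 99.999):
    minutes = downtime_budget_minutes(target)
    print(f"{target}% uptime -> {minutes:.2f} min/month ({minutes * 60:.0f} s)")
```

For instance, `downtime_budget_minutes(99.9)` returns about 43.2 minutes, and `downtime_budget_minutes(99.999)` about 0.43 minutes (roughly 26 seconds). Passing `period_days=365` gives an annual budget instead.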

You might also consider using Apdex scoring to gauge user satisfaction with response times. Apdex classifies each response as satisfied (at or below a target threshold T), tolerating (between T and 4T), or frustrated (above 4T), then combines the counts into a score from 0 (every user frustrated) to 1 (every user satisfied). A score above 0.85 generally indicates a positive user experience.
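The standard Apdex formula counts satisfied samples fully and tolerating samples at half weight: Apdex = (satisfied + tolerating / 2) / total, where a response time t is satisfied if t ≤ T, tolerating if T < t ≤ 4T, and frustrated otherwise. In this sketch the target T = 0.5 s and the sample latencies are hypothetical; choose T to match your own service goals.

```python
from typing import Iterable


def apdex(response_times_s: Iterable[float], t_s: float) -> float:
    """Compute an Apdex score from response times (seconds) and a target threshold T."""
    satisfied = tolerating = total = 0
    for t in response_times_s:
        total += 1
        if t <= t_s:            # satisfied: t <= T
            satisfied += 1
        elif t <= 4 * t_s:      # tolerating: T < t <= 4T, counted at half weight
            tolerating += 1
        # frustrated (t > 4T) contributes nothing to the numerator
    if total == 0:
        raise ValueError("need at least one response time")
    return (satisfied + tolerating / 2) / total


samples = [0.2, 0.3, 0.4, 0.9, 1.5, 2.5]  # hypothetical response times in seconds
print(round(apdex(samples, t_s=0.5), 2))  # 0.67: 3 satisfied, 2 tolerating, 1 frustrated
```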

Store your baseline data in a centralized system for easy access and comparison. Time-series databases or monitoring platforms are commonly used to retain historical data, making it simpler to determine whether performance changes are due to scaling or underlying system issues.

With these baselines in place, you're ready to move on to real-time performance monitoring tools and techniques.

Monitoring and Analysis Tools

The right monitoring tools turn raw data into actionable insights, helping you detect bottlenecks before they disrupt user experiences. Because tools differ in capabilities such as real-time alerting and in-depth performance analysis, choosing the right combination is essential for identifying and resolving issues effectively.

Core Monitoring Tools

Application Performance Monitoring (APM) platforms such as New Relic are indispensable for tracking application metrics and user experiences. These tools automatically capture key data like response times, error rates, and transaction traces. Features like distributed tracing make it easier to pinpoint slow database queries or sluggish API calls.

Grafana is a versatile visualization tool that integrates with multiple data sources. When paired with time-series databases like Prometheus or InfluxDB, Grafana excels at creating dashboards that link metrics - such as correlating CPU spikes with slower response times - making it easier to spot performance issues at a glance.

Apache JMeter is a load-testing tool that actively simulates user traffic to measure how systems handle concurrent users. By generating traffic and testing server throughput under various conditions, JMeter helps identify breaking points and resource limitations before they impact production environments.

The ELK Stack (Elasticsearch, Logstash, and Kibana) focuses on log analysis and search capabilities. Logstash gathers and processes log data, Elasticsearch makes it searchable, and Kibana visualizes the results. This combination is ideal for identifying error patterns, tracking event frequencies, and linking logs to performance drops.

System-level monitoring tools like Nagios, Zabbix, and Datadog provide a bird’s-eye view of infrastructure metrics. These platforms monitor critical hardware data like CPU usage, memory consumption, disk I/O, and network traffic, making them essential for detecting hardware-related bottlenecks and planning capacity upgrades.

Database monitoring tools such as pgAdmin for PostgreSQL or MySQL Enterprise Monitor offer specialized insights into database performance. These tools track metrics like query execution times, lock contention, and buffer pool usage - details that general-purpose monitors might overlook but are crucial for optimizing database performance.

Each type of tool serves a unique purpose: APM tools focus on application performance, system monitors handle hardware metrics, and database tools specialize in storage and query analysis. Many organizations use a mix of these tools to cover their entire tech stack, ensuring both immediate problem-solving and long-term performance optimization.

Real-Time vs. Historical Data

Real-time monitoring delivers up-to-the-second visibility into system performance, allowing teams to respond quickly to emerging issues. Dashboards refresh every few seconds, displaying live metrics like CPU usage, active connections, and response times. This is critical for catching sudden traffic surges, memory leaks, or failing components before they spiral into larger problems.

Real-time alerts are triggered when metrics cross predefined thresholds - such as CPU usage exceeding 80% or response times surpassing 2 seconds. These alerts enable teams to address problems within minutes, minimizing downtime.
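A common refinement is to fire an alert only after a threshold has been breached for several consecutive samples, so a single brief spike does not page anyone (Prometheus alerting rules express the same idea with a `for:` duration). The class below is a hypothetical minimal sketch of that logic using the 80% CPU example; in practice your monitoring platform's alerting engine would handle this for you.

```python
from dataclasses import dataclass, field


@dataclass
class ThresholdAlert:
    """Fire only after `consecutive` samples in a row exceed `threshold`."""

    name: str
    threshold: float
    consecutive: int = 3
    _breaches: int = field(default=0, init=False, repr=False)

    def observe(self, value: float) -> bool:
        """Record one sample and return True while the alert condition holds."""
        self._breaches = self._breaches + 1 if value > self.threshold else 0
        return self._breaches >= self.consecutive


cpu_alert = ThresholdAlert("cpu_percent", threshold=80.0, consecutive=3)
for sample in [72, 85, 91, 79, 88, 90, 93]:  # hypothetical CPU readings, one per minute
    if cpu_alert.observe(sample):
        print(f"ALERT {cpu_alert.name}={sample}: above {cpu_alert.threshold} for {cpu_alert.consecutive}+ samples")
```

With these readings only the final sample triggers the alert: the early spikes at 85 and 91 reset when CPU drops back to 79.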

Historical data analysis, on the other hand, uncovers long-term trends and recurring patterns that real-time monitoring might miss. By examining data over weeks or months, teams can identify seasonal traffic fluctuations, gradual performance declines, or recurring bottlenecks. For example, a 15% increase in database query times over three months could signal growing data volumes or inefficient queries that need optimization.

Historical analysis also supports capacity planning. Trends like increasing memory usage or escalating traffic volumes help predict when resources will reach their limits, enabling proactive scaling or upgrades.

Combining both approaches creates a well-rounded monitoring strategy. Real-time data provides immediate feedback for crisis management, while historical analysis informs strategic decisions to prevent future issues. Many modern tools offer both real-time dashboards and long-term data storage, so teams can move between short-term troubleshooting and long-term planning in one place.

The best results come when teams routinely review real-time alerts to address immediate concerns and analyze historical trends for smarter scaling and optimization decisions. This dual approach ensures systems remain efficient and resilient over time.

How to Find Bottlenecks Step by Step

Once you’ve established baseline metrics and set up monitoring tools, the next step is to zero in on bottlenecks. This involves systematically testing, monitoring, and analyzing your system under load to identify where performance issues arise.

Load and Stress Testing

Load testing helps you evaluate how your system performs under typical user demand. Start by defining your performance goals, such as acceptable response times, throughput targets, and error rate thresholds. These goals act as benchmarks to spot deviations. Tools like JMeter or Gatling can simulate traffic and gradually increase the load until performance starts to degrade.
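To illustrate the idea behind a stepped load test without JMeter or Gatling, here is a small Python sketch that calls a request function at increasing concurrency levels and records median latency, an approximate 95th percentile, and the error rate for each step. `send_request` is a placeholder you supply (for example, a function that issues one HTTP GET with `urllib.request.urlopen` against a test endpoint, never production), and the concurrency levels and request counts are arbitrary assumptions. Because it runs Python threads on a single machine, it cannot generate the volume a dedicated load-testing tool can.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Iterable, Tuple


def step_load_test(
    send_request: Callable[[], None],
    concurrency_levels: Iterable[int],
    requests_per_level: int = 100,
) -> Dict[int, dict]:
    """Run `requests_per_level` calls at each concurrency level; report latency and errors."""

    def timed_call(_: int) -> Tuple[float, bool]:
        start = time.perf_counter()
        try:
            send_request()
            ok = True
        except Exception:  # count any failure (timeout, HTTP error, ...) as an error
            ok = False
        return time.perf_counter() - start, ok

    results: Dict[int, dict] = {}
    for workers in concurrency_levels:
        with ThreadPoolExecutor(max_workers=workers) as pool:
            outcomes = list(pool.map(timed_call, range(requests_per_level)))
        latencies = sorted(elapsed for elapsed, _ in outcomes)
        errors = sum(1 for _, ok in outcomes if not ok)
        results[workers] = {
            "p50_ms": 1000 * statistics.median(latencies),
            "p95_ms": 1000 * latencies[int(0.95 * (len(latencies) - 1))],  # simple index-based p95
            "error_rate": errors / len(outcomes),
        }
    return results
```

Calling `step_load_test(send_request, [1, 5, 10, 25, 50])` and watching where p95 latency or the error rate jumps between steps points to the concurrency level at which the system starts to degrade.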

Stress testing, on the other hand, pushes the system beyond its normal limits to reveal breaking points. During both tests, keep an eye on metrics like CPU usage, memory consumption, and network bandwidth. For example, CPU usage nearing 100%, memory spikes, or maxed-out bandwidth often correlate with slower response times or higher error rates.

Real user monitoring (RUM) can complement these synthetic tests by providing data on actual user experiences. This can uncover bottlenecks that controlled tests might miss.

The next step is to analyze resource usage to pinpoint the root causes of performance issues.

Resource Analysis

Compare resource usage data against your baseline metrics to uncover hidden constraints. Here’s what to look for:

- CPU: Bottlenecks often occur when usage consistently exceeds 80% or spikes unexpectedly.

- Memory: High or erratic usage might indicate memory leaks or inefficiencies.

- Disk I/O: Monitor for high utilization or long wait times, which can slow down operations.

- Network: Check bandwidth usage and latency to identify slow API responses or timeouts.

- Database Performance: Use tools like MySQL Workbench or SQL Server Profiler to analyze query execution times, indexing, and transaction locking. Queries taking longer than 100 milliseconds could indicate inefficient operations, such as row-by-agonizing-row (RBAR) processing, that need optimization.
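Comparing current readings with a baseline can start as a simple rule: flag a metric if it crosses an absolute limit (such as the 80% CPU and 100 ms query thresholds above) or rises well above its own baseline. In the sketch below the baseline values, metric names, and 50% tolerance are hypothetical; in practice you would load the baseline from your monitoring history.

```python
from typing import Dict, Tuple

# Hypothetical baseline (for example, typical p95 values over the baseline window).
BASELINE: Dict[str, float] = {
    "cpu_percent": 45.0,
    "memory_percent": 60.0,
    "disk_await_ms": 4.0,
    "p95_query_ms": 40.0,
}

# Absolute limits, using the CPU and query-time rules of thumb from the checklist.
HARD_LIMITS: Dict[str, float] = {"cpu_percent": 80.0, "p95_query_ms": 100.0}


def find_deviations(
    current: Dict[str, float],
    baseline: Dict[str, float] = BASELINE,
    tolerance: float = 0.5,
) -> Dict[str, Tuple[float, str]]:
    """Flag metrics above a hard limit or more than `tolerance` (0.5 = 50%) above baseline."""
    flagged: Dict[str, Tuple[float, str]] = {}
    for metric, value in current.items():
        limit = HARD_LIMITS.get(metric)
        if limit is not None and value > limit:
            flagged[metric] = (value, f"above hard limit of {limit}")
        elif metric in baseline and value > baseline[metric] * (1 + tolerance):
            flagged[metric] = (value, f"{value / baseline[metric]:.1f}x baseline")
    return flagged


now = {"cpu_percent": 85.0, "memory_percent": 62.0, "disk_await_ms": 9.0, "p95_query_ms": 70.0}
for metric, (value, reason) in find_deviations(now).items():
    print(f"{metric}={value}: {reason}")
```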

Log and Trace Analysis

Logs and traces provide critical insights when combined with baseline and real-time metrics. Logs can highlight recurring errors, timeouts, or resource warnings that signal bottlenecks. For example, timeout messages or errors related to resource limits often point directly to problem areas.
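Before adopting a full log platform, a short script can count warning and error lines that match known bottleneck symptoms, bucketed by minute, so spikes can be lined up with your metrics. The log format, regular expressions, and category names below are assumptions; adapt them to what your services actually emit.

```python
import re
from collections import Counter
from typing import Iterable

# Assumed line format: "<ISO-8601 timestamp> <LEVEL> <message>",
# e.g. "2025-09-22T14:03:17Z ERROR upstream timed out after 30s"
LINE_RE = re.compile(
    r"^(?P<minute>\d{4}-\d{2}-\d{2}T\d{2}:\d{2}):\d{2}\S*\s+(?P<level>[A-Z]+)\s+(?P<msg>.*)$"
)

# Hypothetical symptom categories and the message patterns that indicate them.
PATTERNS = {
    "timeout": re.compile(r"timed? ?out", re.IGNORECASE),
    "conn_pool": re.compile(r"too many connections|pool exhausted", re.IGNORECASE),
    "oom": re.compile(r"out of memory|\bOOM\b", re.IGNORECASE),
}


def count_problems_per_minute(lines: Iterable[str]) -> Counter:
    """Count (minute, category) pairs for WARN/ERROR lines that match a symptom pattern."""
    counts: Counter = Counter()
    for line in lines:
        match = LINE_RE.match(line.rstrip("\n"))
        if not match or match["level"] not in ("WARN", "ERROR"):
            continue  # skip unparseable lines and INFO/DEBUG noise
        for category, pattern in PATTERNS.items():
            if pattern.search(match["msg"]):
                counts[(match["minute"], category)] += 1
    return counts
```

Passing an open log file to `count_problems_per_minute` and sorting the result by minute shows when timeouts or connection-pool errors cluster, which you can then compare with CPU, memory, or query-time graphs for the same window.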

Distributed tracing tools like OpenTelemetry with Jaeger allow you to track a request’s journey across microservices, revealing delays caused by slow database queries, API timeouts, or problematic service dependencies. Detailed instrumentation, such as logging operation start and end times, can help identify code sections that consume excessive resources. Similarly, database query logs can expose inefficiencies like RBAR operations.
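OpenTelemetry records this kind of timing as spans. As a dependency-free stand-in (not the OpenTelemetry API), the Python sketch below uses a context manager that logs each operation's duration and raises the log level when it exceeds a threshold. The operation name and the 100 ms threshold are hypothetical.

```python
import logging
import time
from contextlib import contextmanager
from typing import Iterator

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("timing")
log.setLevel(logging.INFO)  # ensure INFO timings are emitted even if the root logger is stricter


@contextmanager
def timed(operation: str, slow_ms: float = 100.0) -> Iterator[None]:
    """Log an operation's duration; use WARNING instead of INFO when it exceeds `slow_ms`."""
    start = time.perf_counter()
    try:
        yield
    finally:  # runs even if the block raises, so failures are timed too
        elapsed_ms = (time.perf_counter() - start) * 1000
        level = logging.WARNING if elapsed_ms > slow_ms else logging.INFO
        log.log(level, "op=%s elapsed_ms=%.1f", operation, elapsed_ms)


with timed("load_user_orders"):  # hypothetical operation name
    time.sleep(0.05)              # stand-in for a database call
```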

Thread contention is another area worth examining. Analyzing thread dumps can uncover deadlocks, thread starvation, or excessive context switching, all of which can drag down performance. Capturing stack trace snapshots during performance spikes can further pinpoint the exact code paths causing delays.
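On the JVM, thread dumps are typically captured with `jstack`. In Python, a comparable snapshot of every thread's current stack can be taken with `sys._current_frames()` (a documented but underscore-prefixed CPython function) and the `traceback` module. The sketch below returns the dump as text so it could be logged when a latency alert fires; how and when you trigger it is left to you. Python's `faulthandler.dump_traceback(all_threads=True)` is a built-in alternative that writes directly to a file.

```python
import sys
import threading
import traceback


def snapshot_thread_stacks() -> str:
    """Return a text dump of every running thread's current call stack."""
    names = {t.ident: t.name for t in threading.enumerate()}
    sections = []
    for thread_id, frame in sys._current_frames().items():
        sections.append(f"--- Thread {names.get(thread_id, '?')} (id={thread_id}) ---")
        sections.append("".join(traceback.format_stack(frame)))  # outermost call first
    return "\n".join(sections)
```

Taking several snapshots a second or two apart during a spike and looking for threads stuck on the same lock or call is often more revealing than a single dump.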

Between March and November 2020, Miro experienced a sevenfold increase in usage, reaching over 600,000 unique users per day. To address server bottlenecks during this rapid scaling, Miro's System team focused on monitoring the median (50th percentile) task completion time rather than averages or queue sizes. This approach helped them optimize the processes that affected the majority of users.
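Why prefer percentiles over averages? A handful of very slow tasks can pull the mean far from what most users experience, while the median describes the typical case and higher percentiles expose the tail. The durations below are invented for illustration and are not Miro's data.

```python
import statistics
from typing import Dict, List


def summarize(durations_ms: List[float]) -> Dict[str, float]:
    """Mean plus p50/p95/p99 using statistics.quantiles (default 'exclusive' method)."""
    cuts = statistics.quantiles(durations_ms, n=100)  # 99 cut points; cuts[k-1] ~ k-th percentile
    return {
        "mean": statistics.fmean(durations_ms),
        "p50": cuts[49],
        "p95": cuts[94],
        "p99": cuts[98],
    }


# Hypothetical task durations: 90 fast, 5 slightly slower, 5 very slow outliers.
durations = [120.0] * 90 + [150.0] * 5 + [4000.0] * 5
print({name: round(value) for name, value in summarize(durations).items()})
```

Here the mean (about 316 ms) describes neither the typical task (the median is 120 ms) nor the slow tail (p95 and p99 are in the multi-second range), which is why percentile views tend to guide optimization better.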

Common Bottleneck Sources and Their Effects

Understanding common bottleneck sources helps you target your monitoring and respond faster when problems appear. Different bottlenecks leave distinct traces, which can help you pinpoint and resolve issues effectively.

Here’s a breakdown of the most frequent bottleneck sources, their warning signs, and how they limit scalability.

Diving Deeper Into Bottlenecks

CPU bottlenecks occur most often during traffic surges. As sustained CPU usage climbs past roughly 80%, requests spend increasingly long waiting in queues before being processed, leading to delays and timeouts. Once code-level optimizations are exhausted, horizontal scaling is often the most practical remedy.
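One way to see why latency climbs so sharply near saturation is the textbook M/M/1 queueing model, which assumes a single server, random (Poisson) arrivals, and exponentially distributed service times. Under those idealized assumptions the mean response time is R = S / (1 - ρ), where S is the service time per request and ρ is utilization. Real servers have many cores and different traffic patterns, so treat this sketch as intuition rather than a prediction; the 10 ms service time is an assumption.

```python
def mm1_response_time(service_time_s: float, utilization: float) -> float:
    """Mean time a request spends in an idealized M/M/1 system (waiting + service)."""
    if not 0 <= utilization < 1:
        raise ValueError("utilization must be in [0, 1); at 100% the queue grows without bound")
    return service_time_s / (1 - utilization)


for rho in (0.5, 0.7, 0.8, 0.9, 0.95):
    r_ms = 1000 * mm1_response_time(0.010, rho)  # assume 10 ms of CPU work per request
    print(f"utilization {rho:.0%}: mean response time ~{r_ms:.0f} ms")
```

With 10 ms of work per request, the model gives about 20 ms at 50% utilization, 50 ms at 80%, 100 ms at 90%, and 200 ms at 95%: each step toward saturation costs far more latency than the last.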

Memory issues tend to stay silent until RAM usage approaches critical levels. Once that happens, applications may slow significantly from swapping or heavy garbage collection, or crash outright, forcing costly upgrades or optimization work.

Database bottlenecks are a common challenge in scaling web applications. Symptoms like query timeouts and exhausted connection pools can cripple performance, often requiring database clustering or the addition of read replicas to distribute the load.

Network constraints typically show up when dealing with large files or frequent API calls. High latency or packet loss, especially across different regions, often signals the need for content delivery networks (CDNs) or other distribution strategies.

Storage bottlenecks arise as data demands increase. Traditional drives with limited IOPS can slow down file operations and database writes, making SSDs or distributed storage architectures critical for maintaining performance.

Application code bottlenecks are unique because they stem from inefficiencies in design or implementation, such as memory leaks or poor caching strategies. Fixing these issues often requires in-depth profiling, refactoring, or even reworking the architecture to handle scaling demands.

Addressing Bottlenecks for Better Scalability

Hardware bottlenecks like CPU and memory can sometimes be mitigated with vertical scaling, but this approach has limits. Eventually, horizontal scaling becomes unavoidable. On the other hand, database and application code bottlenecks typically require optimization work before additional resources can be fully effective.

Fixing Bottlenecks for Better Scaling

Once bottlenecks are identified, the next step is to address them effectively. The goal is to tackle the root causes rather than just the symptoms, ensuring your infrastructure can handle future growth without running into the same problems.

Fixing Identified Bottlenecks

CPU bottlenecks: If CPU usage regularly exceeds 80%, it’s time to act. Start by optimizing your code - streamline inefficient algorithms and reduce resource-heavy operations. While upgrading your hardware (vertical scaling) can provide immediate relief, it’s only a temporary fix. For long-term scalability, implement load balancing and horizontal scaling to distribute workloads across multiple servers, as a single server will eventually hit its limits.

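Memory issues: Use profiling tools to detect memory leaks and optimize how your application allocates memory. Upgrading your RAM is a good short-term solution, but for better scalability, consider designing stateless applications. Because a stateless service keeps session data in an external store (such as a shared cache or database) rather than in process memory, any instance can handle any request, so memory load spreads across instances and you can add or replace instances freely.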
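For Python services, the standard-library `tracemalloc` module is one such profiling tool: take a heap snapshot, run some workload, take another, and compare them to see which source lines account for the growth. The deliberately leaky `handle_request` function and module-level list below are hypothetical; other runtimes have their own heap profilers.

```python
import tracemalloc

_request_log = []  # hypothetical module-level list that is never cleared (the "leak")


def handle_request(payload: str) -> None:
    """Hypothetical request handler with a deliberate leak."""
    _request_log.append(payload * 100)  # grows forever: nothing ever removes entries


def top_memory_growth(n_requests: int = 10_000, top: int = 3):
    """Snapshot the heap, run a workload, snapshot again, and return the largest growth sites."""
    tracemalloc.start()
    before = tracemalloc.take_snapshot()
    for i in range(n_requests):
        handle_request(f"req-{i}")
    after = tracemalloc.take_snapshot()
    tracemalloc.stop()
    return after.compare_to(before, "lineno")[:top]  # StatisticDiff objects, largest change first


for stat in top_memory_growth():
    print(stat)  # file:line with size and count deltas between the two snapshots
```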

Database bottlenecks: Slow queries are often the culprit. Optimize them and add appropriate indexes to speed things up. Other strategies include using connection pooling, setting up read replicas to distribute query loads, and sharding databases for write-heavy applications. Upgrading to NVMe SSDs can also provide a significant performance boost.
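To see what an index changes, the Python sketch below uses SQLite (because it ships with Python) to compare the query plan and timing of the same lookup before and after adding an index. The table, column, and index names and the row counts are hypothetical; MySQL and PostgreSQL offer `EXPLAIN` as well, but their output looks different.

```python
import sqlite3
import time
from typing import List, Sequence


def query_plan(conn: sqlite3.Connection, sql: str, params: Sequence = ()) -> List[str]:
    """Return the human-readable 'detail' column of SQLite's EXPLAIN QUERY PLAN."""
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]


def time_query_ms(conn: sqlite3.Connection, sql: str, params: Sequence = ()) -> float:
    """Run a query to completion and return its wall-clock time in milliseconds."""
    start = time.perf_counter()
    conn.execute(sql, params).fetchall()
    return (time.perf_counter() - start) * 1000


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    ((i % 20_000, i * 0.01) for i in range(200_000)),  # 200k hypothetical orders, 20k customers
)

query = "SELECT id, total FROM orders WHERE customer_id = ?"
before_plan, before_ms = query_plan(conn, query, (42,)), time_query_ms(conn, query, (42,))
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after_plan, after_ms = query_plan(conn, query, (42,)), time_query_ms(conn, query, (42,))

print(f"before: {before_plan} {before_ms:.2f} ms")  # expect a full table SCAN
print(f"after:  {after_plan} {after_ms:.2f} ms")    # expect SEARCH ... USING INDEX
```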

Network constraints: If your network is struggling, consider upgrading your bandwidth and using CDNs to reduce the distance data needs to travel. Compress responses and minimize payload sizes to make data transfers more efficient. For global audiences, deploying servers in multiple geographic locations can help reduce latency.
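To get a feel for how much compact encoding and compression can save, the Python sketch below serializes a hypothetical list of repetitive JSON records with default separators, with compact separators, and gzip-compressed. Real savings depend on your payloads, and in production compression is usually switched on in the web server or reverse proxy (for example, nginx's `gzip` directive) rather than in application code.

```python
import gzip
import json

# Hypothetical list-endpoint response: many similar records, which compress well.
records = [
    {"id": i, "status": "shipped", "warehouse": "eu-west", "items": [1, 2, 3]}
    for i in range(1000)
]

default_json = json.dumps(records).encode("utf-8")                          # ", " and ": " separators
compact_json = json.dumps(records, separators=(",", ":")).encode("utf-8")  # no extra whitespace
gzipped = gzip.compress(compact_json, compresslevel=6)

print(f"default: {len(default_json):,} B | compact: {len(compact_json):,} B | gzip: {len(gzipped):,} B")
```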

Storage bottlenecks: Replace traditional hard drives with SSDs to handle higher IOPS (input/output operations per second). For more efficient storage management, use distributed storage systems and separate workloads - for example, high-performance storage for databases and standard storage for backups.

These strategies work best when paired with a hosting environment that supports scalability.

Using Scalable Hosting Solutions

Modern hosting infrastructure is a key component in resolving and preventing bottlenecks. FDC Servers offers hosting options tailored for scalability challenges, such as unmetered dedicated servers that eliminate bandwidth limitations and VPS solutions powered by EPYC processors with NVMe storage for peak performance.

Their dedicated server plans, starting at $129/month, are highly customizable. With root access and the ability to modify hardware, you can address performance issues without being locked into rigid hosting plans. Plus, unmetered bandwidth means you won’t hit data-transfer caps as traffic grows, although the port speed of your connection still sets the ceiling on throughput.

For workloads requiring advanced processing power, GPU servers (starting at $1,124/month) provide the resources needed for AI, machine learning, and other intensive applications. These servers also come with unmetered bandwidth and customizable configurations to meet specific demands.

To tackle network latency, global distribution is key. FDC Servers operates in over 70 locations worldwide, allowing you to deploy servers closer to your users for faster response times. Their CDN services further enhance content delivery with optimized global points of presence.

Need resources fast? Their instant deployment feature lets you scale up quickly, avoiding delays in hardware provisioning. This is especially useful for handling sudden traffic surges or addressing performance issues on short notice.

Incorporating these hosting solutions can significantly improve your ability to overcome bottlenecks and prepare for future growth.

Ongoing Monitoring and Review

Continuous monitoring is essential to ensure that your fixes remain effective over time. Set up automated alerts for key metrics, such as CPU usage exceeding 75%, memory usage above 85%, or response times that surpass acceptable thresholds.

Schedule monthly performance reviews to track trends and spot emerging issues. Keep an eye on growth metrics and anticipate when your current resources might fall short. By planning upgrades proactively, you can avoid costly emergency fixes that disrupt user experience.

Regular load testing is another critical step. Test your system under expected peak loads and simulate sudden traffic spikes to ensure your fixes can handle real-world conditions. Gradual load increases and stress tests can reveal hidden vulnerabilities before they become problems.

Finally, document every bottleneck incident and its resolution. This creates a valuable knowledge base for your team, making it easier to address similar issues in the future. Tracking the effectiveness of your solutions will also help refine your strategies over time, ensuring your infrastructure stays robust as your needs evolve.

Conclusion

To tackle scaling challenges effectively, establish clear baselines and monitor your system consistently. Start by measuring key metrics like CPU usage, memory, disk I/O, and network throughput to understand your system’s typical performance. These benchmarks will help you pinpoint abnormalities when they arise.

Leverage real-time dashboards and historical data to detect and resolve issues before they disrupt user experiences. Techniques such as load testing and log analysis are invaluable for assessing performance under stress and identifying weak points in your infrastructure. Common bottlenecks like CPU overload, memory leaks, database slowdowns, network congestion, and storage limitations each require specific, targeted solutions.

However, fixing bottlenecks isn’t enough on its own. The real game-changer lies in proactive monitoring and scalable infrastructure. A system designed to adapt to increasing demand ensures long-term reliability, preventing recurring issues. Modern hosting options, such as FDC Servers, offer scalable solutions with rapid deployment and a global network spanning 70+ locations. This flexibility allows you to address performance issues quickly without waiting for new hardware.

The secret to successful scaling is staying vigilant. Set up automated alerts, perform regular performance checks, and keep detailed records of past bottlenecks for future reference. Remember, scaling isn’t a one-time task - it’s an ongoing process that evolves alongside your infrastructure and user needs. With the right combination of monitoring, tools, and scalable hosting solutions, you can build a system that not only meets today’s demands but is also ready for tomorrow’s growth.

FAQs

What are the best ways to resolve database bottlenecks when scaling servers?

To tackle database bottlenecks when scaling servers, start by reducing and spreading the load. Caching layers absorb repeated reads before they reach the database, and load balancers distribute application traffic across servers, both of which ease the pressure on your database. Keep a close eye on key metrics with monitoring tools, tracking response times, error rates, CPU usage, memory, disk I/O, and network activity to identify issues before they escalate.

For storage and performance challenges, consider scaling solutions such as vertical scaling (upgrading your hardware), horizontal scaling (adding more servers), or database sharding. You can also improve efficiency by optimizing database queries and ensuring proper indexing. By staying proactive with monitoring and fine-tuning, you’ll keep your system running smoothly as your servers grow.

How can I tell if my server's performance issues are caused by hardware limitations or inefficient application code?

To figure out whether your server's sluggish performance is due to hardware limits or poorly optimized application code, start by keeping an eye on key system metrics like CPU usage, memory consumption, disk I/O, and network activity. If these metrics are consistently maxed out, it’s a strong sign that your hardware might be struggling to keep up. However, if the hardware metrics seem fine but applications are still lagging, the problem could be buried in the code.

Performance monitoring tools and server logs are your go-to resources for digging deeper. Check for clues like slow database queries, inefficient loops, or processes that hog resources. Routine testing and tuning are crucial to ensure your server can handle growth and perform smoothly as demands increase.

What are the advantages of real-time monitoring tools over using historical data for managing server scalability?

Real-time monitoring tools are a game-changer when it comes to keeping systems running smoothly. They deliver instant alerts and actionable insights, helping you tackle issues as they happen. This kind of immediate feedback is key to avoiding performance hiccups during server scaling. Plus, it ensures your resources are allocated efficiently, which is crucial for managing ever-changing workloads.

Meanwhile, historical data analysis shines when it comes to spotting long-term trends or figuring out the root causes of past problems. But there's a catch - if you rely only on historical data, you might miss the chance to act quickly on current issues. That delay could lead to downtime or performance bottlenecks. While both methods have their place, real-time monitoring is indispensable for making quick adjustments and keeping servers performing at their best in fast-paced environments.
